Which novel layer module was introduced in MobileNetV2?

The inverted residual with linear bottleneck.

How does the convolutional module in MobileNetV2 reduce the memory footprint?

By keeping large tensors only as intermediate state inside the block.

The large (expanded) tensors exist only inside the inverted residual with linear bottleneck; the block's inputs and outputs are the small bottleneck tensors. The expanded tensors therefore never need to be fully stored in main memory, as they would in a module where the large tensors feed, for example, a residual connection.
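To make the size difference concrete, here is a minimal sketch that counts activation values for a single block. The feature-map size, channel count, and expansion factor are illustrative assumptions, not figures taken from these notes.

```python
def tensor_elements(height, width, channels):
    """Number of activation values in a height x width x channels tensor."""
    return height * width * channels


# Hypothetical block: 56x56 feature map, 24 bottleneck channels, expansion factor 6.
height, width, bottleneck_channels, expansion = 56, 56, 24, 6

# Tensor that crosses block boundaries (and feeds the shortcut connection).
block_io = tensor_elements(height, width, bottleneck_channels)

# Tensor that exists only between the expansion and projection layers.
expanded = tensor_elements(height, width, bottleneck_channels * expansion)

print(block_io)
print(expanded)
print(expanded // block_io)  # how many times larger the intermediate tensor is
```

Only the smaller tensor has to outlive the block; the larger one is needed only while the block is being evaluated.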

When is an inverted residual block (MobileNetV2) conceptually the same as a classical residual block?

When the expansion factor is smaller than 1.

Which activation function is used in MobileNetV2?

ReLU6

What is the reason ReLU6 is used in MobileNetV2?

Because of its robustness when used with low-precision computation.
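For reference, ReLU6 is an ordinary ReLU whose output is capped at 6, so activations always stay within a fixed range. A minimal pure-Python sketch (the function name `relu6` and the sample values are chosen here for illustration):

```python
def relu6(x):
    """ReLU capped at 6: returns x clamped to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


samples = [-3.0, 0.0, 2.5, 6.0, 40.0]
outputs = [relu6(x) for x in samples]
print(outputs)
```

Negative inputs map to 0 and anything above 6 maps to 6, which keeps the output range bounded regardless of the input.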

How many multiply-adds and parameters does the default MobileNetV2 have? And how much accuracy does it get on ImageNet?

300M MAdds and 3.4M parameters, with a top-1 accuracy of 72.0% on ImageNet.

What is changed from SSD in SSDLite?

In the SSD prediction layers, all regular convolutions are replaced by separable convolutions (a depthwise convolution followed by a 1×1 projection).
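The saving from this swap can be estimated by counting weights. The sketch below compares a regular convolution with a depthwise-separable one; the 3×3 kernel and 256 channels are assumed example values, and biases are ignored.

```python
def regular_conv_params(kernel, in_channels, out_channels):
    """Weights in a standard kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


def separable_conv_params(kernel, in_channels, out_channels):
    """Weights in a depthwise conv followed by a 1x1 pointwise conv (biases ignored)."""
    depthwise = kernel * kernel * in_channels  # one kernel x kernel filter per input channel
    pointwise = in_channels * out_channels     # 1x1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 256 input and 256 output channels.
regular = regular_conv_params(3, 256, 256)
separable = separable_conv_params(3, 256, 256)

print(regular)
print(separable)
print(round(regular / separable, 2))  # reduction factor for this example layer
```

For these example sizes the separable version needs roughly an order of magnitude fewer weights.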

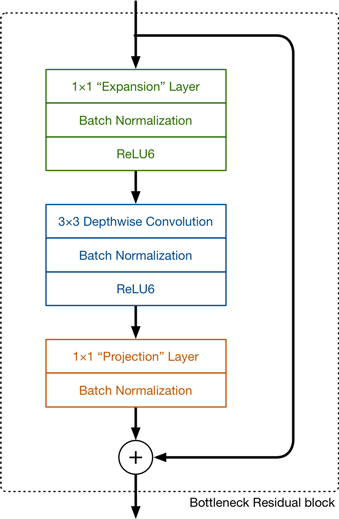

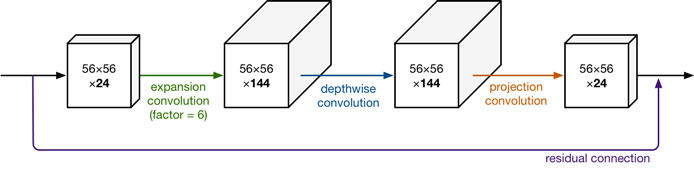

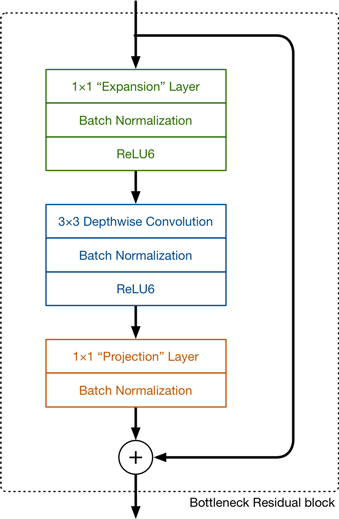

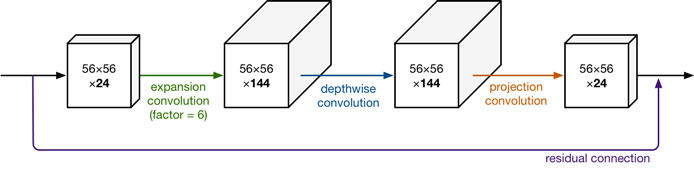

What does the main building block of MobileNetV2 look like?

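It is the inverted residual block with a linear bottleneck: a 1×1 expansion convolution with ReLU6, a 3×3 depthwise convolution with ReLU6, and a 1×1 linear projection without activation, with the shortcut connecting the narrow bottleneck tensors.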
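The structure of this block can be sketched by tracing tensor shapes through its three layers. This is a rough illustration, not a trainable implementation: the function name, the example sizes, and the shortcut rule (stride 1 with matching input and output channels, as in common implementations) are assumptions made here.

```python
def inverted_residual_shapes(height, width, in_channels, out_channels,
                             stride=1, expansion=6):
    """Trace (height, width, channels) through one inverted residual block.

    Assumes 'same'-style padding and spatial sizes divisible by the stride.
    """
    hidden = in_channels * expansion
    out_h, out_w = height // stride, width // stride
    stages = [
        ("input (bottleneck)", (height, width, in_channels)),
        ("1x1 expansion conv + ReLU6", (height, width, hidden)),
        ("3x3 depthwise conv + ReLU6", (out_h, out_w, hidden)),
        ("1x1 linear projection (no activation)", (out_h, out_w, out_channels)),
    ]
    # The shortcut adds input to output, so both shapes have to match.
    use_shortcut = stride == 1 and in_channels == out_channels
    return stages, use_shortcut


stages, use_shortcut = inverted_residual_shapes(56, 56, 24, 24)
for name, shape in stages:
    print(f"{name}: {shape}")
print("shortcut:", use_shortcut)
```

The trace shows the narrow-wide-narrow pattern that gives the block its name: channels expand inside the block and shrink again before the output.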

For example:

"The manifold of interest should lie in a low-dimensional subspace of the higher-dimensional activation space"